Autonomous Robotic Reinforcement Learning with Asynchronous Human Feedback

Abstract

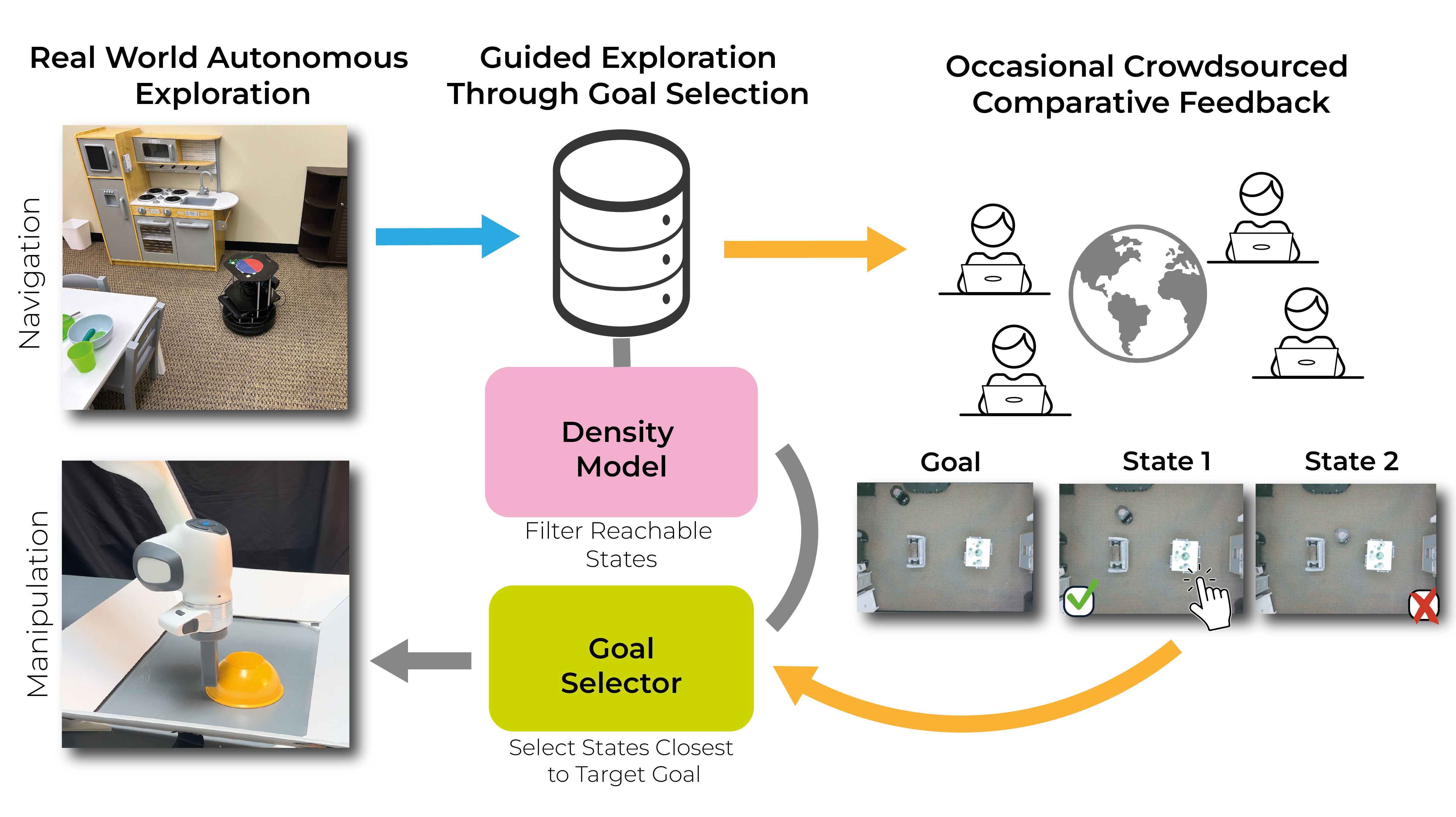

Ideally, we would place a robot in a real-world environment and leave it there to improve on its own by gathering more experience autonomously. However, algorithms for autonomous robotic learning have been challenging to realize in the real world. While this has often been attributed to sample complexity, even sample-efficient techniques are hampered by two major challenges: the difficulty of providing well-"shaped" rewards, and the difficulty of continual, reset-free training. In this work, we describe a system for real-world reinforcement learning that enables agents to show continual improvement by training directly in the real world, without painstaking effort to hand-design reward functions or reset mechanisms. Our system leverages occasional non-expert human-in-the-loop feedback from remote users to learn informative distance functions that guide exploration, while using a simple self-supervised learning algorithm for goal-directed policy learning. We show that in the absence of resets, it is particularly important to account for the current "reachability" of the exploration policy when deciding which regions of the space to explore. Based on this insight, we instantiate a practical learning system, GEAR, which enables robots to simply be placed in real-world environments and left to train autonomously without interruption. The system streams robot experience to a web interface and requires only occasional asynchronous feedback from remote, crowdsourced, non-expert humans in the form of binary comparative feedback. We evaluate this system on a suite of robotic tasks in simulation and demonstrate its effectiveness at learning behaviors both in simulation and in the real world.

Method
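The abstract describes learning a distance function from binary comparative feedback ("which of these two states is closer to the goal?"). One common way to turn such comparisons into a distance is a Bradley-Terry model, where the probability that state a is judged closer than state b is sigmoid(d(b) - d(a)). The sketch below fits a linear distance this way; it is an illustration under stated assumptions, not GEAR's actual objective or architecture. The featurization, the linear model, `fit_distance`, and the `simulated_annotator` standing in for remote humans are all hypothetical.

```python
import math
import random


def features(state, goal):
    # Hypothetical featurization: per-axis absolute offset from the goal.
    return [abs(state[0] - goal[0]), abs(state[1] - goal[1])]


def distance(w, state, goal):
    # Learned distance-to-goal: a linear function of the features.
    return sum(wi * fi for wi, fi in zip(w, features(state, goal)))


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def fit_distance(comparisons, goal, lr=0.05, epochs=50):
    """Fit w from (a, b, label) triples; label = 1 if a was judged closer to goal."""
    w = [0.0, 0.0]
    for _ in range(epochs):
        for a, b, label in comparisons:
            # Bradley-Terry: P(a closer than b) = sigmoid(d(b) - d(a)).
            p = sigmoid(distance(w, b, goal) - distance(w, a, goal))
            fa, fb = features(a, goal), features(b, goal)
            # SGD step on the negative log-likelihood of the observed label.
            for i in range(len(w)):
                w[i] -= lr * (p - label) * (fb[i] - fa[i])
    return w


def simulated_annotator(a, b, goal):
    # Hypothetical stand-in for a remote human answering a binary comparison.
    return 1 if math.dist(a, goal) < math.dist(b, goal) else 0


rng = random.Random(0)
goal = (5.0, 5.0)
pairs = [((rng.uniform(0, 10), rng.uniform(0, 10)),
          (rng.uniform(0, 10), rng.uniform(0, 10))) for _ in range(200)]
comparisons = [(a, b, simulated_annotator(a, b, goal)) for a, b in pairs]
w = fit_distance(comparisons, goal)
```

The annotator only ever answers "a or b?", yet the fitted weights yield a distance that is smallest at the goal and grows away from it, which is the kind of signal that can guide exploration without a hand-shaped reward. In practice the linear model would be replaced by a neural network over observations.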

Real World Experiments

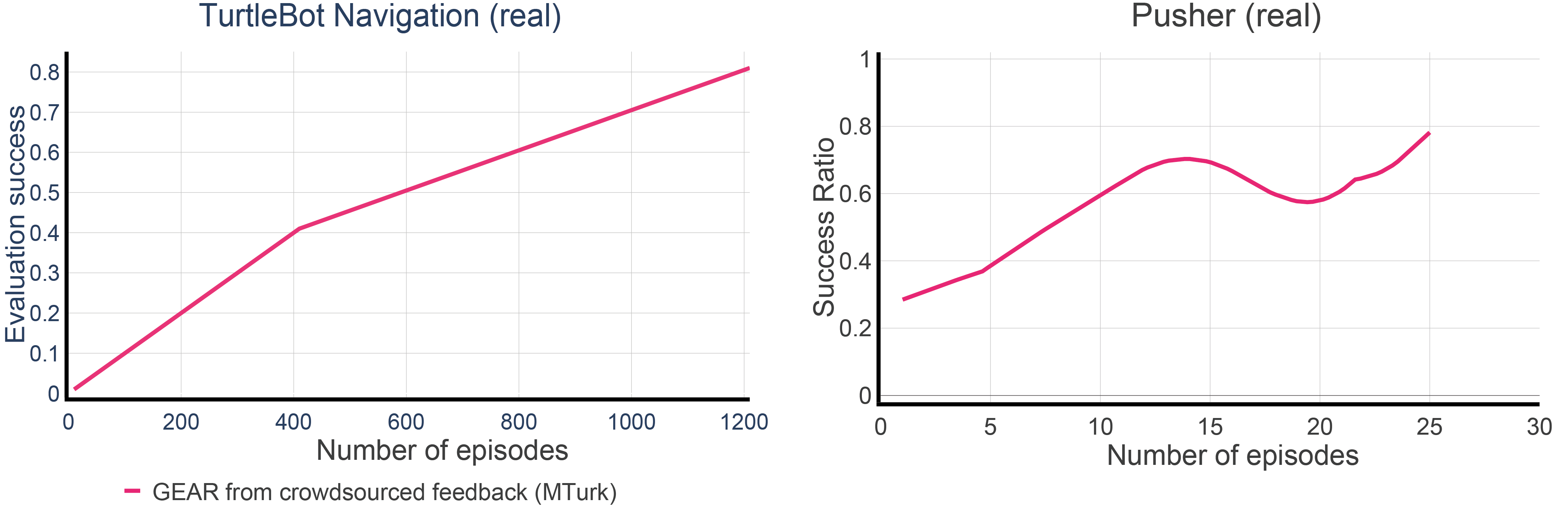

Continual uninterrupted learning

Autonomous continual learning in the real world: the Franka arm learns to push a block, and the mobile base learns to reach a target position.

Success rate of GEAR's policies when evaluated on the real-world tasks.

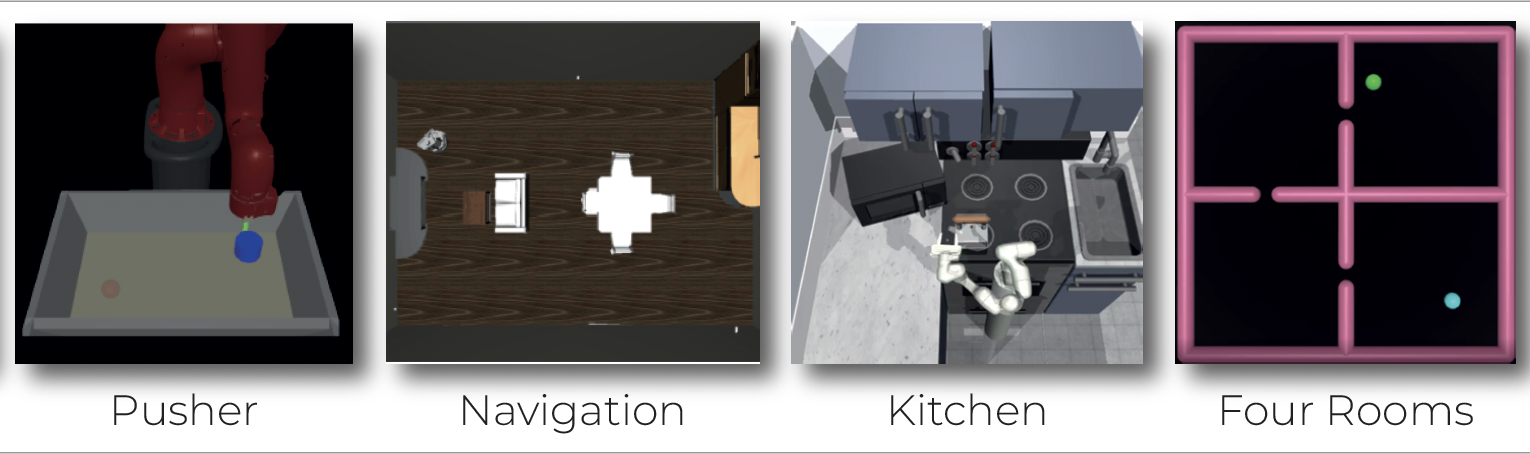

Simulation Experiments

The four simulation benchmarks on which we compare GEAR against baselines. Kitchen and Pusher are manipulation tasks; Four Rooms and Navigation are 2D navigation tasks.

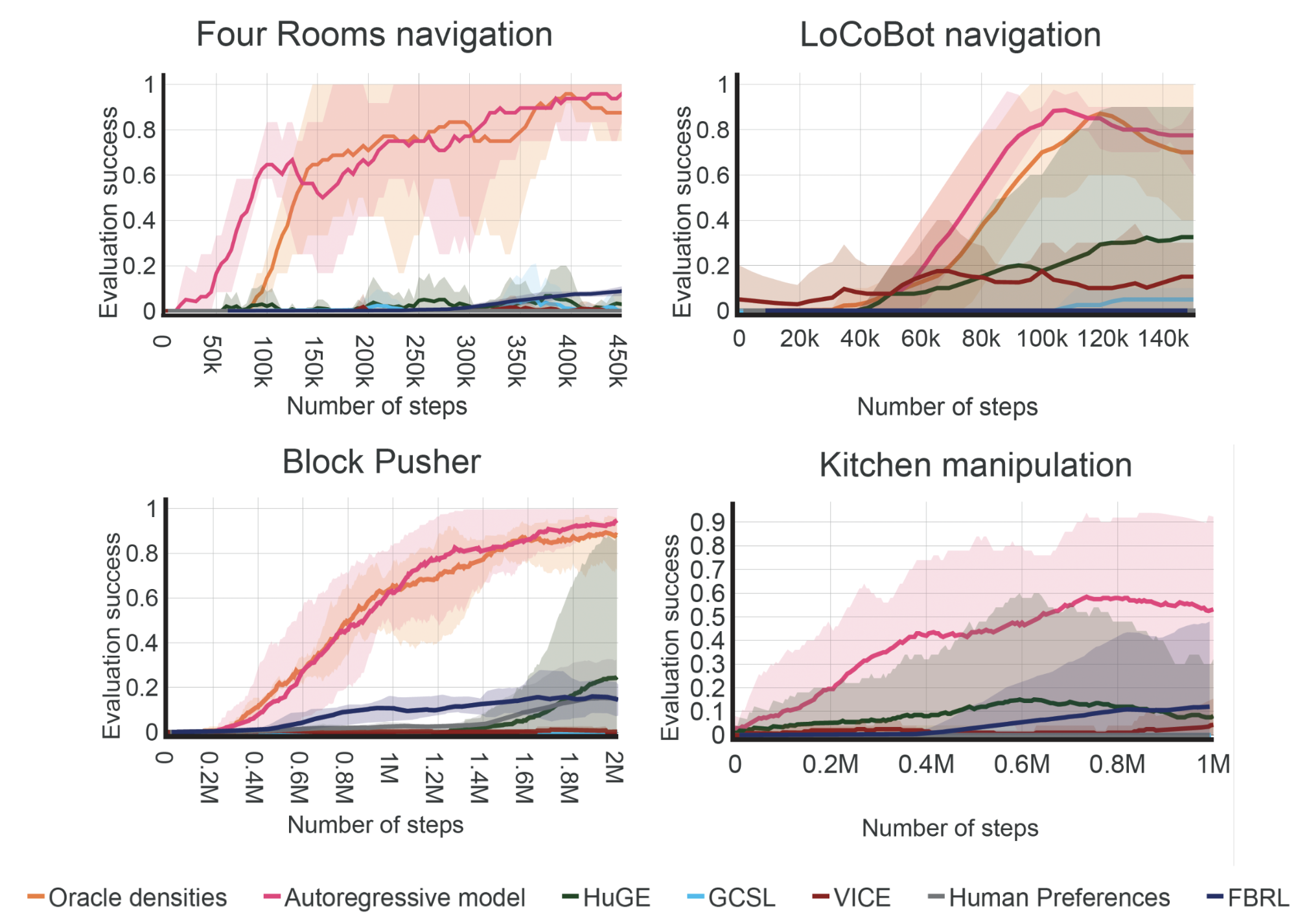

Success curves of GEAR and the baselines on the proposed benchmarks. GEAR outperforms the baselines, which fail to solve most of the environments. Furthermore, the variant using an autoregressive model performs as well as the one using oracle densities. Some curves are not visible because the corresponding methods never succeed and stay at 0. Note: due to the large observation space of the Kitchen environment, GEAR could only be run with the autoregressive model, so there is no oracle-densities curve for that experiment. Curves show the average of 4 runs, and the shaded region corresponds to the standard deviation.

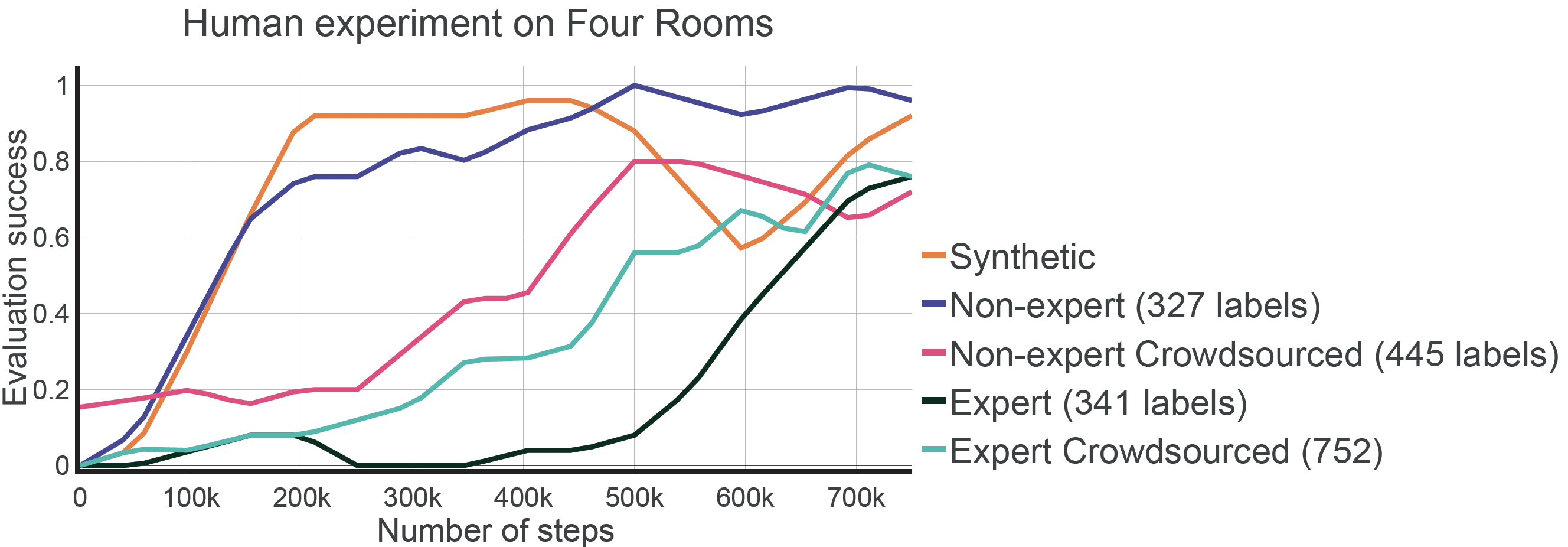

Crowdsourced Experiments

Performance in Four Rooms with different types of human feedback. GEAR succeeds with every type of annotation tested.

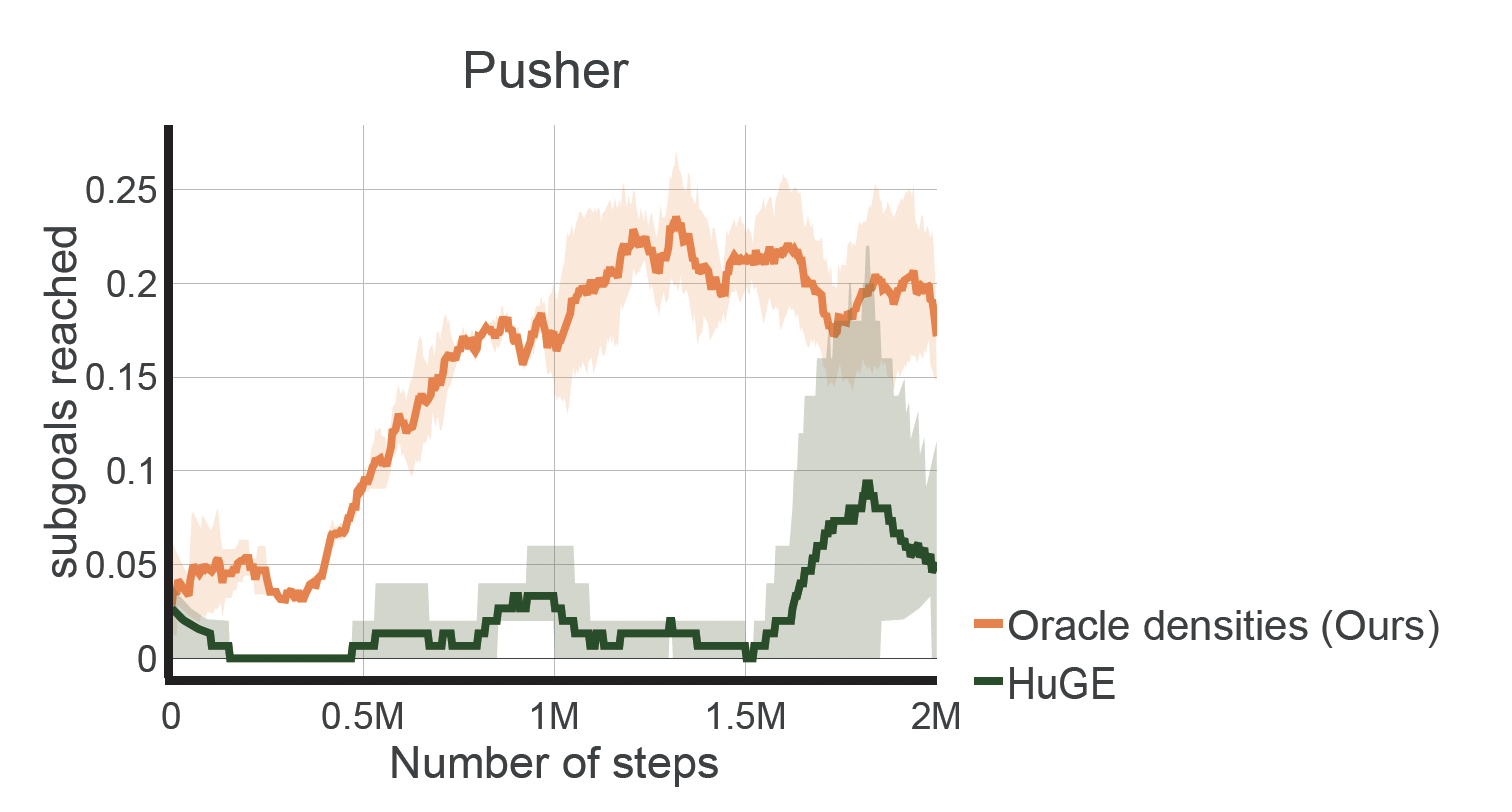

Reachability

By accounting for reachability, GEAR commands meaningful goals, i.e., subgoals that it knows how to reach. Other methods, such as HuGE, fail to do so, which translates into poorer performance.
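To make the reachability idea concrete, here is a minimal sketch of subgoal selection that only considers states the policy visits often enough to be treated as reachable, and among those picks the one ranked closest to the goal. The captions above mention density models (autoregressive or oracle); here a grid histogram stands in as a hypothetical placeholder. The threshold `min_density`, `fit_density`, and `select_subgoal` are illustrative assumptions rather than GEAR's actual selection rule, and the `min_density=0.0` case only loosely mirrors a selector that ignores reachability.

```python
import math
from collections import Counter


def fit_density(visited, cell=1.0):
    # Hypothetical stand-in for a learned density model over visited states:
    # a normalized histogram on a grid with cells of side `cell`.
    counts = Counter((int(x // cell), int(y // cell)) for x, y in visited)
    total = len(visited)

    def density(state):
        key = (int(state[0] // cell), int(state[1] // cell))
        return counts[key] / total

    return density


def select_subgoal(candidates, distance_to_goal, density, min_density):
    # Keep only candidates visited often enough to count as reachable,
    # then pick the one the distance function ranks closest to the goal.
    reachable = [s for s in candidates if density(s) >= min_density]
    pool = reachable or candidates  # fall back if nothing passes the threshold
    return min(pool, key=distance_to_goal)


goal = (9.0, 9.0)
# Many visits near the start, plus one lucky visit close to the goal.
visited = [(0.5, 0.5)] * 10 + [(1.5, 0.5)] * 8 + [(2.5, 0.5)] * 5 + [(8.5, 8.5)]
candidates = sorted(set(visited))
density = fit_density(visited)


def dist_fn(s):
    return math.dist(s, goal)


naive = select_subgoal(candidates, dist_fn, density, min_density=0.0)
aware = select_subgoal(candidates, dist_fn, density, min_density=0.1)
print(naive, aware)  # (8.5, 8.5) (2.5, 0.5)
```

Ignoring reachability commands the rarely visited state next to the goal, which a reset-free policy may be unable to return to; the reachability-aware rule instead commands the frontier of the region the policy can reliably reach, and that frontier moves outward as experience accumulates.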